GANtlitz: Ultra High Resolution Generative Model for Multi-Modal Face Textures

1ETH Zurich, Switzerland

2Google


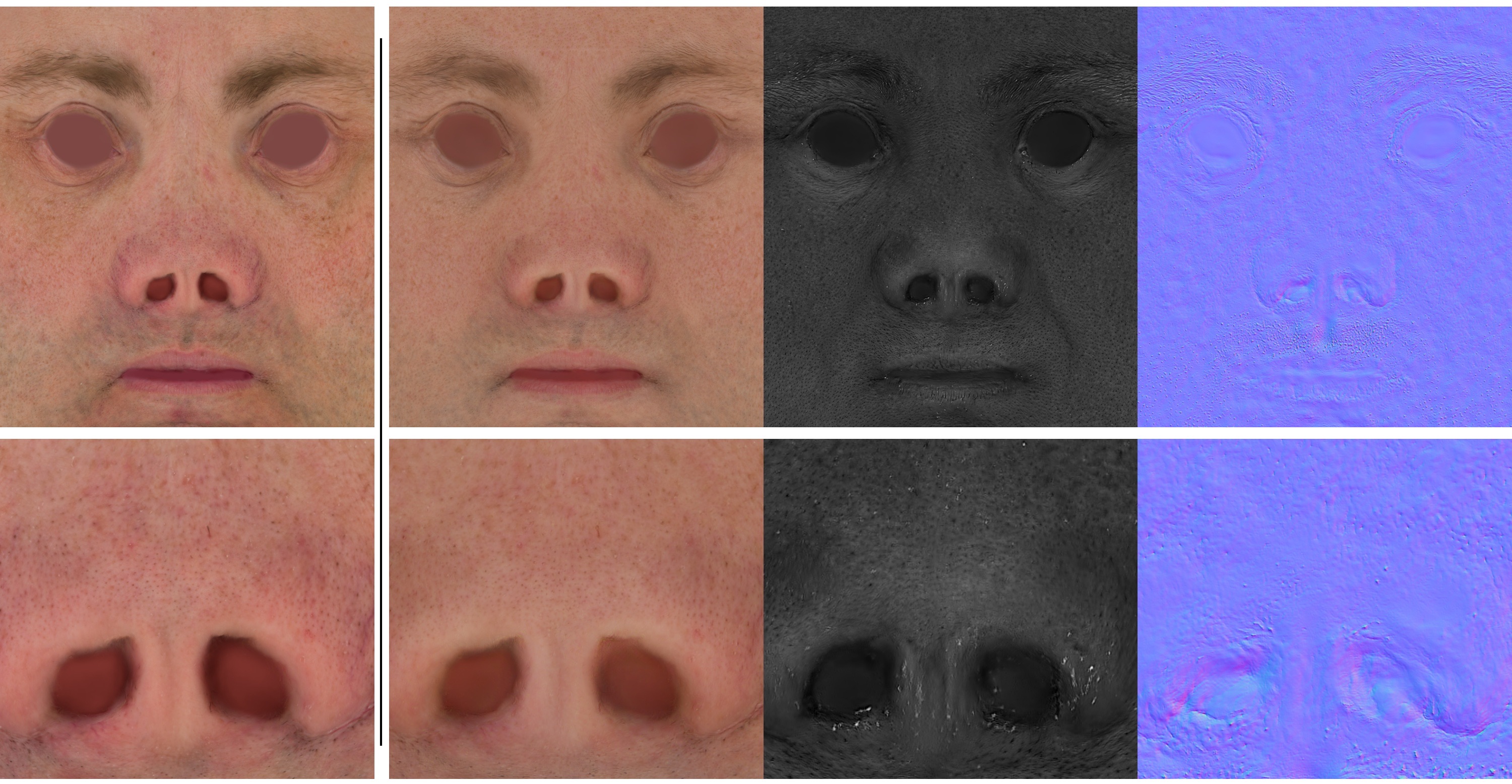

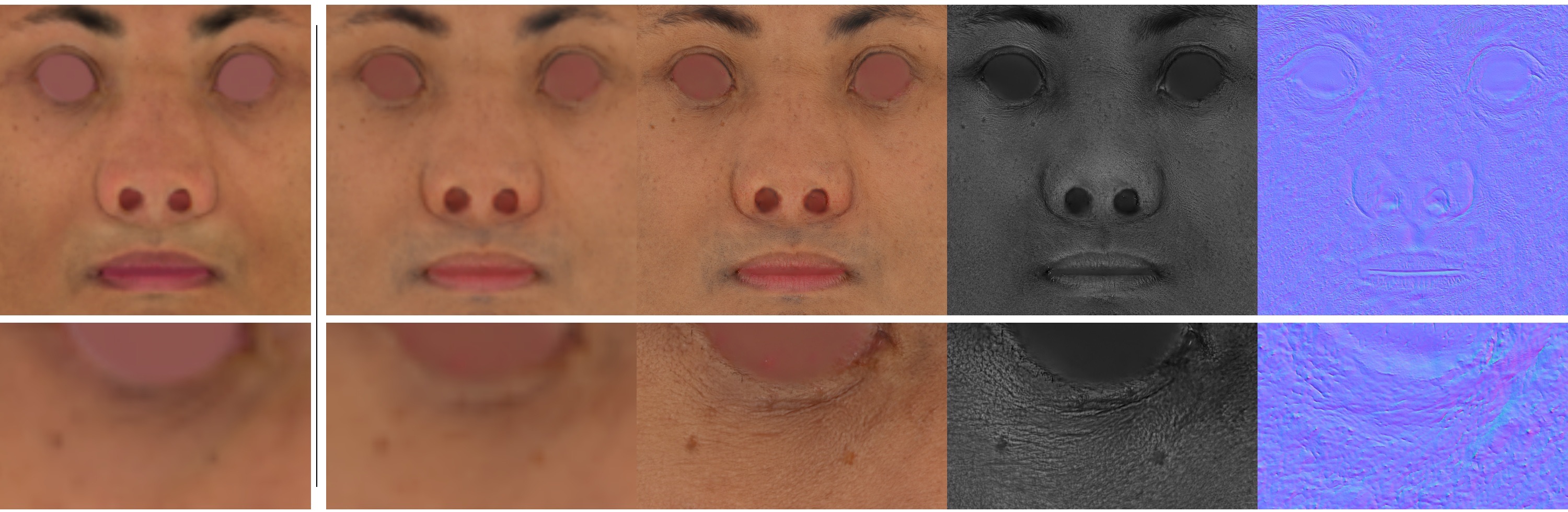

The StyleGAN architecture additionally enables resampling of the noise map inputs. While the coarse structure is preserved, novel variations of wrinkle and freckle patterns appear.
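The effect of noise resampling can be illustrated with a minimal toy sketch. This is not the GANtlitz model or any StyleGAN implementation: `toy_synthesis`, `sample_noise_maps`, `noise_strength`, and the 4x4 base resolution are hypothetical names and values chosen for illustration only. The sketch assumes a generator in which a fixed latent determines the coarse layout and each resolution level adds its own independent per-pixel noise map.

```python
import numpy as np

def upsample2x(x):
    # Nearest-neighbour upsampling of a 2D map by a factor of 2.
    return np.repeat(np.repeat(x, 2, axis=0), 2, axis=1)

def toy_synthesis(w, noise_maps, noise_strength=0.1):
    # Toy stand-in for a StyleGAN-like synthesis network (an assumption,
    # not the authors' architecture). The latent w alone defines the
    # coarse 4x4 base; every level upsamples and adds a scaled noise map.
    x = w.reshape(4, 4)
    for noise in noise_maps:
        x = upsample2x(x) + noise_strength * noise
    return x

def sample_noise_maps(rng, levels=3, base=4):
    # One independent Gaussian noise map per resolution level (8, 16, 32).
    return [rng.standard_normal((base * 2 ** (i + 1),) * 2) for i in range(levels)]

def downsample_mean(x, size=4):
    # Average-pool back to the coarse resolution to compare structure.
    f = x.shape[0] // size
    return x.reshape(size, f, size, f).mean(axis=(1, 3))

rng = np.random.default_rng(0)
w = rng.standard_normal(16)                       # fixed latent: same "identity"
img_a = toy_synthesis(w, sample_noise_maps(rng))  # first noise draw
img_b = toy_synthesis(w, sample_noise_maps(rng))  # resampled noise, same latent

# Fine detail differs between the two draws, coarse structure stays close.
detail_diff = np.abs(img_a - img_b).mean()
coarse_diff = np.abs(downsample_mean(img_a) - downsample_mean(img_b)).max()
```

In this toy setting, `img_a` and `img_b` differ pixel by pixel, while their average-pooled versions remain close to the base defined by `w`. This mirrors, in a much simplified form, the behaviour described above.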


@inproceedings{gruber2024gantlitz,
  title={GANtlitz: Ultra High Resolution Generative Model for Multi-Modal Face Textures},
  author={Aurel Gruber and Edo Collins and Abhimitra Meka and Franziska Mueller and
          Kripasindhu Sarkar and Sergio Orts-Escolano and Luca Prasso and Jay Busch and
          Markus Gross and Thabo Beeler},
  booktitle={Computer Graphics Forum},
  year={2024}
}