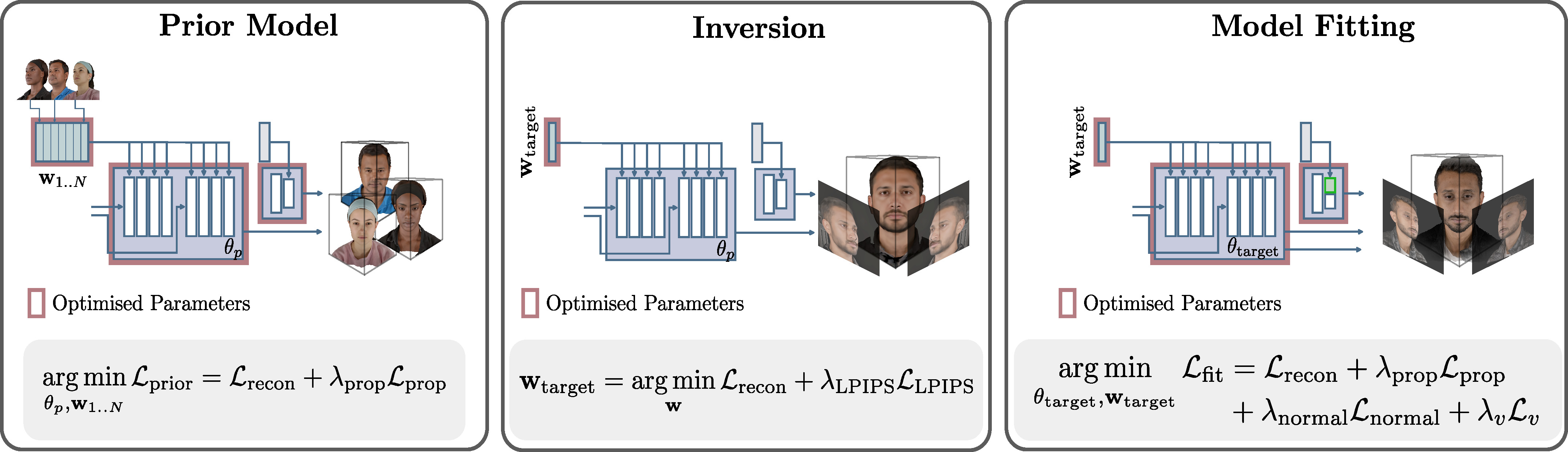

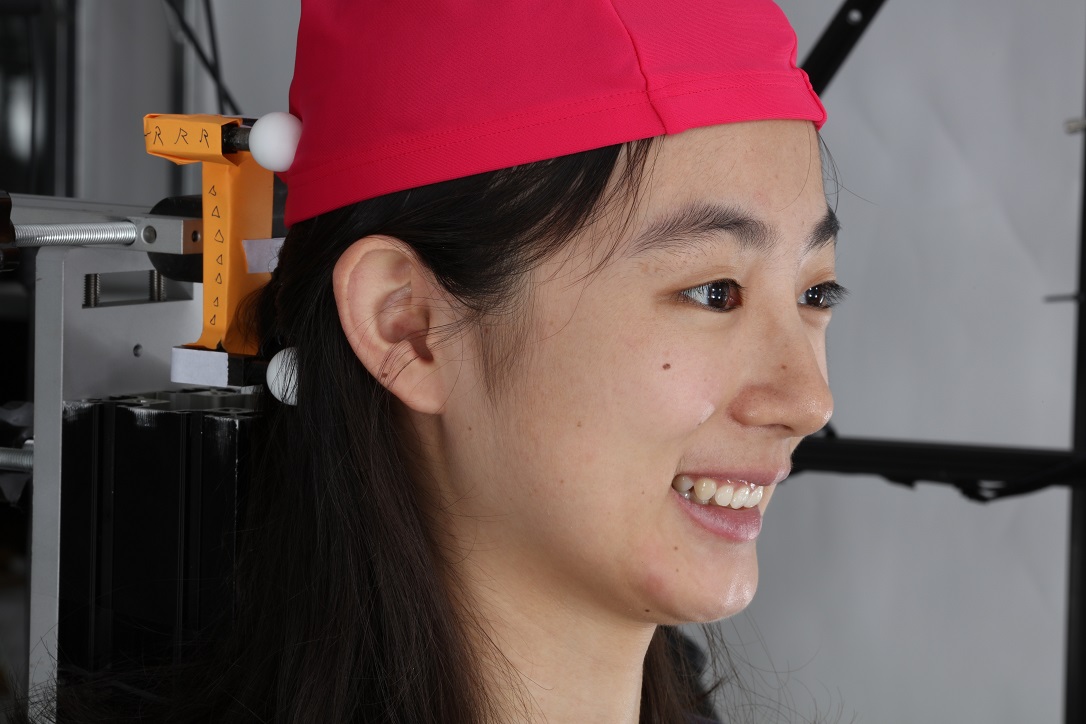

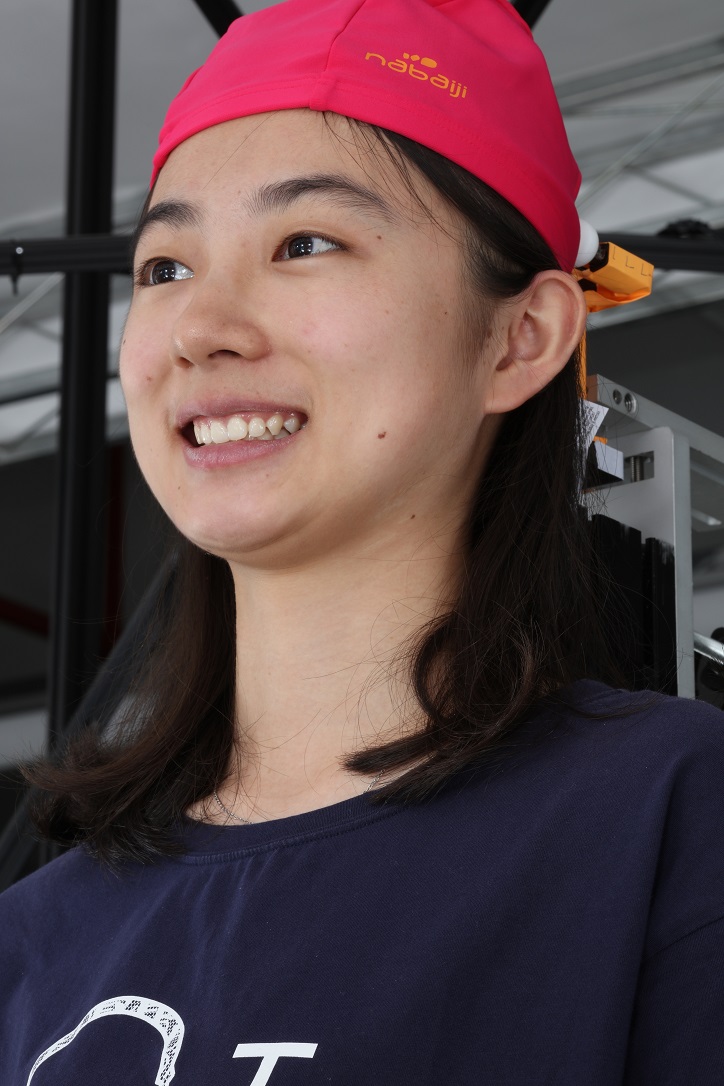

Ultra High-resolution Synthesis from Studio Captures

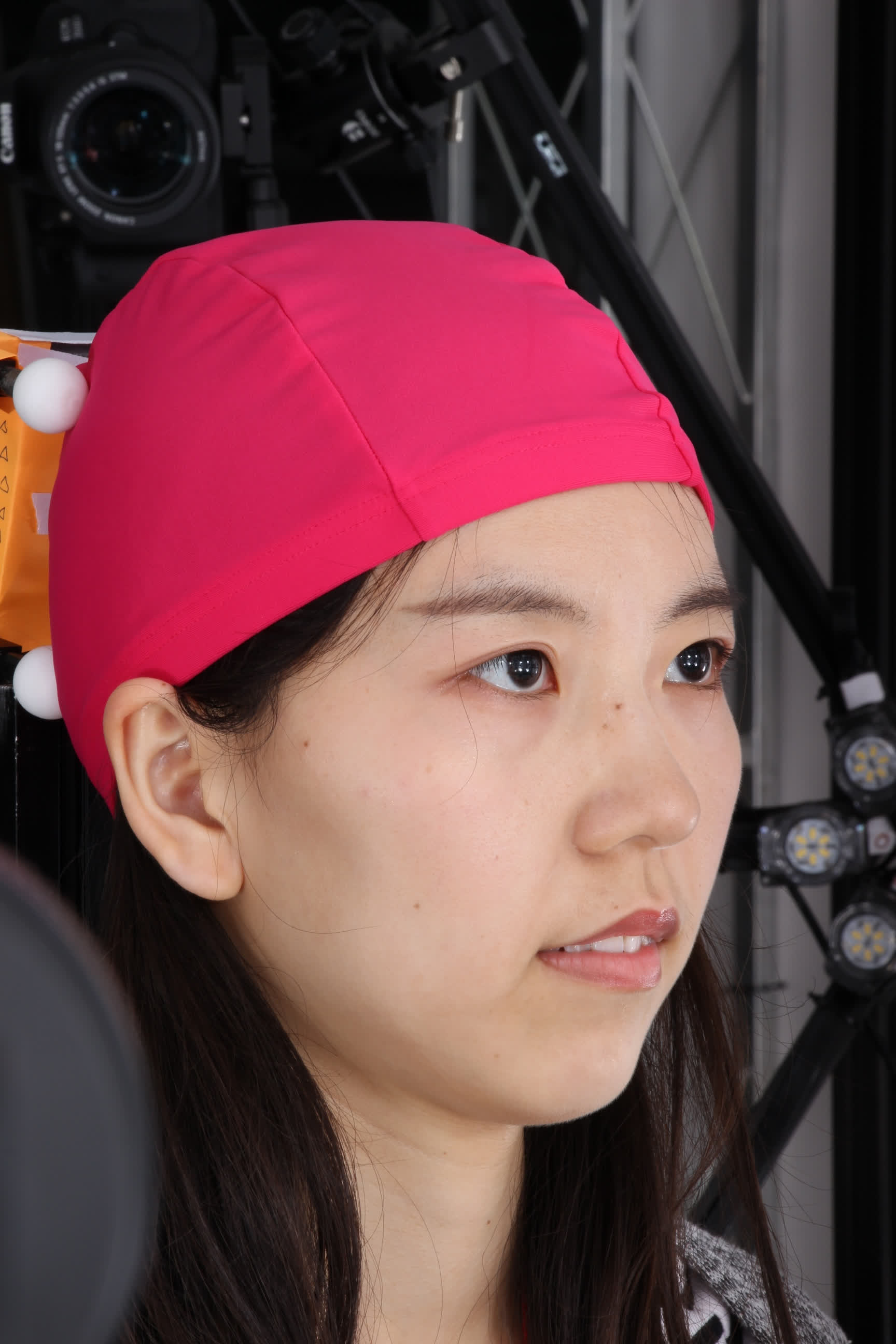

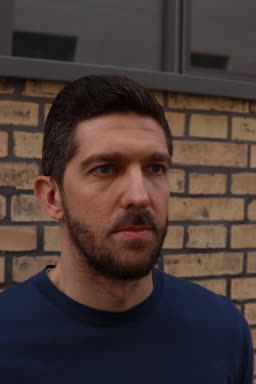

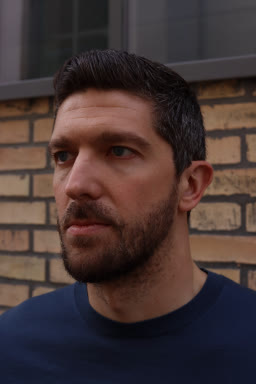

Given 3 views of a held-out test subject from our dataset, we show high-quality novel view synthesis. Note the 3D consistent rendering of details such as hair strands, eye-lashes, and view-dependent effects for example on the forehead.